Tutorial

A quick tour of what can be done with DeNSE.

A single neuron

First, one should import all the modules and variables that are necessary for the simulation:

import dense as ds
from dense.units import *

The first line makes the DeNSE simulator available and lets us call it through the variable ds. The second line imports all the units (e.g. time units like ms, minutes…) that will be used to set the properties of the simulation and of the neurons.

Once this is done, we can create our first neuron:

n = ds.create_neurons()

This creates a default neuron without any neurites (a single soma). Let’s add an axon and a dendrite:

n.create_neurites(2, names=["axon", "dendrite_1"])

By default, neurons have their has_axon variable set to True, meaning that the first neurite created will be an axon and all subsequent neurites will be dendrites.
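The naming rule described above can be mimicked in plain Python. This is a minimal sketch, not DeNSE's implementation. The helper `default_neurite_names` is hypothetical. It assumes that dendrites are numbered from 1 in creation order, which matches the name "dendrite_1" used for the first dendrite. The behaviour shown for `has_axon=False` is an assumption as well.

```python
def default_neurite_names(num_neurites, has_axon=True):
    """Hypothetical helper: name neurites following the rule described above.

    With has_axon=True the first neurite is the axon and the remaining
    ones are dendrites numbered from 1 ("dendrite_1", "dendrite_2", ...).
    """
    names = []
    first_dendrite = 0
    if has_axon and num_neurites > 0:
        names.append("axon")
        first_dendrite = 1
    # every remaining neurite is a dendrite, numbered from 1
    for i in range(first_dendrite, num_neurites):
        names.append("dendrite_{}".format(i - first_dendrite + 1))
    return names


print(default_neurite_names(2))                  # ['axon', 'dendrite_1']
print(default_neurite_names(3, has_axon=False))  # ['dendrite_1', 'dendrite_2', 'dendrite_3']
```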

Now that the neurites have been created, they can be accessed through n.axon and n.dendrites (or n.neurites to get all of them at the same time). We will set the parameters of the dendrite to make it grow more slowly than the axon.

Because the default name of the first dendrite is "dendrite_1", this reads:

# inspect the neurites
print("{!r}".format(n.axon))
print(n.dendrites)
print(n.neurites)

# dendrite: slower growth, tapering and uniform branching (cph = counts per hour)
dprop = {
    "speed_growth_cone": 0.2*um/minute,
    "taper_rate": 0.01,
    "use_uniform_branching": True,
    "uniform_branching_rate": 0.05*cph
}

# the axon grows faster than the dendrite
n.axon.set_properties({"speed_growth_cone": 0.5*um/minute})
n.dendrites["dendrite_1"].set_properties(dprop)
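To get a feel for these values, the growth-cone speeds and the branching rate can be converted to a 7-day horizon. This is back-of-the-envelope arithmetic under two assumptions. First, each growth cone moves at constant speed the whole time. Second, branching events occur at a constant rate, so 0.05 cph over 168 hours gives 8.4 expected events at that rate. Retraction, tapering and new branches are ignored, so the lengths are only rough upper bounds for a single branch.

```python
MINUTES_PER_DAY = 24 * 60
HOURS_PER_DAY = 24
duration_days = 7

# growth-cone speeds from the example above, in micrometres per minute
speeds_um_per_min = {"axon": 0.5, "dendrite_1": 0.2}

# distance covered in 7 days if each growth cone never stops or turns back
max_lengths_um = {
    name: speed * duration_days * MINUTES_PER_DAY
    for name, speed in speeds_um_per_min.items()
}

# uniform branching rate from the example, in counts per hour (cph)
branching_rate_cph = 0.05
expected_events = branching_rate_cph * duration_days * HOURS_PER_DAY

print({name: round(length) for name, length in max_lengths_um.items()})
# {'axon': 5040, 'dendrite_1': 2016}
print(round(expected_events, 1))  # 8.4
```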

One can then plot and simulate the growth of this neuron:

# Plot the initial state
ds.plot.plot_neurons()

# Simulate a few days then plot the result
ds.simulate(7*day)
ds.plot.plot_neurons()

Two interacting neurons

Again, import all necessary modules and variables:

import dense as ds
from dense.units import *

Once this is done, we can set the various parameters for the simulation and the neuronal properties:

num_omp = 2       # number of OpenMP threads (one seed per thread below)
num_neurons = 2

simu_params = {
    "resolution": 1.*minute,
    "num_local_threads": num_omp,
    "seeds": [0, 1],
    "environment_required": False,
}

neuron_params = {
    "growth_cone_model": "simple-random-walk",
    "position": [(0., 0.), (100., 100.)]*um,
    "persistence_length": 200.*um,
    "speed_growth_cone": 0.03*um/minute,
    "taper_rate": 1./400.,
    "use_uniform_branching": True,
    "uniform_branching_rate": 0.009*cph,
}

neurite_params = {
    "axon": {"initial_diameter": 4.*um},
    "dendrites": {"taper_rate": 1./200., "initial_diameter": 3.*um}
}

The first line here declares the number of OpenMP threads, i.e. how many parallel threads will be used to perform the simulation. The second line sets the number of neurons that will be simulated.

The third part contains the parameters related to the simulation: the timestep used to run the simulation, the number of threads used in the parallel simulation, the random seeds that will be used to generate random numbers (one per OpenMP thread), and whether the neurons are embedded in spatial boundaries.

Then, the neuron_params dictionary contains the information that will be used to describe the growth of the two neurons. All values are expressed with their proper units: distances in microns, speeds in microns per minute, and branching events in "counts per hour" (cph).

As no environment is used here, the positions of the neurons are specified explicitly. Their orientation (the direction of the axon) will be set randomly.

Though we used the same parameters for both neurons here, this is not necessary. Different parameters can be passed for each neuron through a list, as shown for the positions.
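The convention of passing either one shared value or one value per neuron can be pictured with the small sketch below. `expand_params` is a hypothetical helper written for illustration, not part of DeNSE. It assumes, as in the example above, that a per-neuron value is a list with one entry per neuron. Note that such a simple rule would misread a shared value that happens to be a list of the same length.

```python
def expand_params(params, num_neurons):
    """Hypothetical helper: build one parameter dict per neuron.

    A value given as a list with one entry per neuron is split across
    neurons; any other value is shared by all neurons.
    """
    per_neuron = [dict() for _ in range(num_neurons)]
    for key, value in params.items():
        is_per_neuron = isinstance(value, list) and len(value) == num_neurons
        for i, neuron_dict in enumerate(per_neuron):
            neuron_dict[key] = value[i] if is_per_neuron else value
    return per_neuron


# unit-free version of the example: positions in um, speed in um/minute
params = {
    "position": [(0., 0.), (100., 100.)],
    "speed_growth_cone": 0.03,
}
for p in expand_params(params, 2):
    print(p)
```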

Once all these parameters are declared, we can configure DeNSE and create the neurons so that everything is ready for the simulation.

# configure DeNSE
ds.set_kernel_status(simu_params)

# create neurons
n = ds.create_neurons(n=num_neurons, params=neuron_params,
                      neurite_params=neurite_params, num_neurites=2)

As can be seen above, the ds variable gives access to the simulator's main functions.

The set_kernel_status() function is used here to transfer the parameters to the kernel of DeNSE (the core of the simulator).

Then, create_neurons() is called to obtain two neurons with the specific set of parameters declared in neuron_params. Each neuron has two neurites (by default, the first is an axon and the second is a dendrite), and each neurite initially consists of a single branch protruding from the soma.

Once the neurons are created, one can visualize them using plot_neurons(), so that their initial state can be inspected before starting the simulation.

Following neuron creation, the simulation can be started and its result can be visualized again:

# Plot the initial state
ds.plot.plot_neurons()

# Simulate a few days then plot the result
ds.simulate(7*day)
ds.plot.plot_neurons()

After this first 7-day simulation, the parameters of the neurons can be changed to account for changes in developmental mechanisms. The new parameters are then used to simulate the next phase of the cells' growth.

# Change parameters and simulate again

# new dendritic and axonal parameters
axon_params = {
    "speed_growth_cone": 0.02*um/minute,
}

dend_params = {
    "use_uniform_branching": False,
    "speed_growth_cone": 0.01*um/minute,
}

neurite_params = {"axon": axon_params, "dendrites": dend_params}

# update the properties of the neurons
ds.set_object_properties(n, neurite_params=neurite_params)

# simulate and plot again
ds.simulate(7*day)
ds.plot.plot_neurons()

Here we separately changed the dendritic and axonal parameters using the set_object_properties() function on the two neurons stored in the variable n (a Population object).
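Changing parameters between phases means that each phase contributes growth at its own speed. The rough arithmetic below gives the maximal path length of a single branch after both 7-day phases. It assumes a constant growth-cone speed in each phase and ignores branching and retraction. The dendrites use the neuron-level speed of 0.03 µm/minute during the first phase.

```python
MINUTES_PER_WEEK = 7 * 24 * 60  # 10080 minutes in one 7-day phase

# growth-cone speeds (um/minute) before and after the parameter change
phases = {
    "axon": [0.03, 0.02],
    "dendrites": [0.03, 0.01],
}

max_length_um = {
    neurite: sum(speed * MINUTES_PER_WEEK for speed in speeds)
    for neurite, speeds in phases.items()
}
print({k: round(v, 1) for k, v in max_length_um.items()})
# {'axon': 504.0, 'dendrites': 403.2}
```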

Once the simulation is over, the neuronal shapes obtained can be saved in standard morphology formats such as SWC or MorphML (NeuroML).

Note that the neuroml Python module is required to use save_to_neuroml().

# In the SWC format
ds.io.save_to_swc("neurons.swc", n)
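For reference, SWC is a plain-text format with one sample point per line and seven whitespace-separated columns. These are: sample id, structure type (1 soma, 2 axon, 3 basal dendrite, 4 apical dendrite), x, y, z, radius, and parent id (-1 for the root). The sketch below writes and re-reads a tiny soma-plus-axon morphology using only the standard library. It illustrates the format and is not DeNSE's `save_to_swc()` implementation. The coordinates are made up.

```python
import io

# (id, type, x, y, z, radius, parent); type 1 = soma, 2 = axon
points = [
    (1, 1, 0.0, 0.0, 0.0, 4.0, -1),   # soma, root of the tree
    (2, 2, 5.0, 0.0, 0.0, 2.0, 1),    # first axon sample, attached to the soma
    (3, 2, 15.0, 2.0, 0.0, 1.9, 2),   # next axon sample
]


def write_swc(stream, samples):
    """Write samples as SWC lines, preceded by a comment header."""
    stream.write("# id type x y z radius parent\n")
    for sid, stype, x, y, z, r, parent in samples:
        stream.write(f"{sid} {stype} {x} {y} {z} {r} {parent}\n")


def read_swc(stream):
    """Parse SWC lines back into tuples, skipping comments and blank lines."""
    samples = []
    for line in stream:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        sid, stype, x, y, z, r, parent = line.split()
        samples.append((int(sid), int(stype), float(x), float(y),
                        float(z), float(r), int(parent)))
    return samples


buffer = io.StringIO()
write_swc(buffer, points)
buffer.seek(0)
print(read_swc(buffer) == points)  # True
```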

Multiprocessing and random number generation

DeNSE supports parallel simulations at the C++ level using OpenMP shared-memory thread parallelism. Currently, the number of threads must be set at the beginning of a script, before any objects are created. This is because each object is allocated to a given thread, which is then responsible for it. The number of threads can be set through

ds.set_kernel_status({"num_local_threads": 4})

if you want your simulation to run on four threads. Parallelism is implemented at the neuronal level: all neurites of a given neuron are associated with the same thread. There is therefore no point in allocating more threads than there are neurons in the simulation.
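Because whole neurons are the unit of parallel work, any threads beyond the number of neurons have nothing to do. The sketch below shows this with a round-robin assignment. Both the round-robin rule and the `assign_neurons` helper are assumptions for illustration, not a description of how DeNSE actually distributes neurons.

```python
def assign_neurons(num_neurons, num_threads):
    """Hypothetical round-robin assignment of neuron ids to threads."""
    workload = {t: [] for t in range(num_threads)}
    for neuron_id in range(num_neurons):
        workload[neuron_id % num_threads].append(neuron_id)
    return workload


work = assign_neurons(num_neurons=2, num_threads=4)
idle = [t for t, neurons in work.items() if not neurons]
print(work)   # {0: [0], 1: [1], 2: [], 3: []}
print(idle)   # [2, 3]
```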

Warning

If you use DeNSE simultaneously with other parallel simulation software, make sure that neither you nor that software sets the OMP_NUM_THREADS variable to a value other than the one used for DeNSE. Doing so would lead to undefined behavior.
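One way to guard against this is to check the environment before configuring the kernel. The function below is a hypothetical helper that relies only on `os.environ`. It reports whether `OMP_NUM_THREADS` is unset or equal to the thread count you intend to pass as "num_local_threads". Values it cannot parse as a single integer are treated as inconsistent.

```python
import os


def omp_env_consistent(num_threads, environ=None):
    """Return True if OMP_NUM_THREADS is unset or equals num_threads."""
    env = os.environ if environ is None else environ
    value = env.get("OMP_NUM_THREADS")
    if value is None:
        return True
    try:
        return int(value) == num_threads
    except ValueError:
        return False  # e.g. a nested list such as "4,2"


print(omp_env_consistent(4, {"OMP_NUM_THREADS": "4"}))  # True
print(omp_env_consistent(4, {"OMP_NUM_THREADS": "8"}))  # False
```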

Since DeNSE also uses random numbers for most of the actions associated with growth cone navigation, you can seed the random number generators used during the simulation through:

ds.set_kernel_status({"seeds": [1, 5, 3, 6]})

Note that one seed must be given for each thread. The result of a simulation should always be the same provided the number of threads and seeds used are identical.
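The idea of one seed per thread can be illustrated with Python's `random.Random`, as can reproducibility under identical threads and seeds. Each simulated thread owns an independently seeded generator, so the same seeds always produce the same draws. This is a conceptual sketch only: DeNSE's generators live in its C++ kernel. `draw_turning_angles` is a hypothetical stand-in for growth-cone decisions.

```python
import random


def draw_turning_angles(seeds, steps=3):
    """Hypothetical: each 'thread' draws turning angles from its own RNG."""
    generators = [random.Random(seed) for seed in seeds]
    return [[rng.uniform(-30.0, 30.0) for _ in range(steps)]
            for rng in generators]


run_a = draw_turning_angles([1, 5, 3, 6])
run_b = draw_turning_angles([1, 5, 3, 6])
run_c = draw_turning_angles([2, 5, 3, 6])
print(run_a == run_b)  # True: same threads and seeds, same numbers
print(run_a == run_c)  # False: the first thread's stream changed
```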

Interactions and simulation speed, various tips

Simulation speed with DeNSE will vary greatly depending on the time resolution and interactions. By default, DeNSE uses a 1 minute timestep, with neuron-neuron interactions turned on, which can lead to rather long simulation times. To increase speed, several approaches may or may not be attractive to you.

- Increase the time step (the "resolution" kernel parameter). Using ds.set_kernel_status({"resolution": 5.*minute}), you can reduce the number of steps necessary to complete the simulation. This has the additional advantage of increasing the size of the compartments composing the neuron, which reduces their number and speeds up the interactions. However, it will obviously make the final results more "crude", as you subsample the real path of the neurites.
- Increase the value of the "growth_threshold_polygon" kernel parameter. This parameter is related to the distance the growth cone must move away from its previous position for a polygon to be generated. At that point, other growth cones are able to interact with this new part of the branch. The speedup is similar to that of a larger time step, for the same reason: fewer geometric operations and polygons to deal with. Note that increasing this value leads to approximations. Growth cones that should have interacted may miss one another if you increase this value too far (over a few microns, for instance), though the error remains very low for values below a micron.
- Switch the interactions off using ds.set_kernel_status({"interactions": False}). Neuron-neuron interactions are currently the main source of CPU load. We know how they could be reduced further, but this is a non-trivial task for which we currently do not have the necessary time. If you are interested and would like to help, feel free to contact us.
- Do not use a spatial environment. The speed gains are smaller than with the other solutions, but this might help in specific cases.
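The effect of the time step on the amount of work is easy to quantify. The sketch below assumes a 7-day simulation and the growth-cone speed of 0.03 µm/minute from the two-neuron example. It counts the number of steps and the distance a growth cone covers per step, a rough proxy for how finely the neurite path is sampled. `steps_and_step_length` is a hypothetical helper.

```python
def steps_and_step_length(resolution_min, duration_min=7 * 24 * 60,
                          speed_um_per_min=0.03):
    """Return (number of time steps, distance in um covered per step)."""
    num_steps = round(duration_min / resolution_min)
    step_length_um = speed_um_per_min * resolution_min
    return num_steps, step_length_um


for dt in (1.0, 5.0, 10.0):
    steps, length = steps_and_step_length(dt)
    print(f"dt = {dt:4.1f} min -> {steps:5d} steps, {length:.2f} um per step")
```

Going from a 1-minute to a 5-minute step divides the number of steps by five. Each step then covers five times more distance, which is why the neurite paths become coarser.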

Embedding neurons in space

One of the original aims of DeNSE was the study of neuronal cultures and devices, where the neurons can be embedded in more or less complex structures.

In the simulator, simple spatial structures such as disks or rectangles can be generated directly from the Shape object.

For more complex shapes, pre-constructed images in SVG or DXF format can be imported using the culture_from_file() function.

import dense as ds
from dense.units import *

# Configuring the simulator and the neuronal properties

num_omp = 5
num_neurons = 50

simu_params = {
    "resolution": 10.*minute,
    "num_local_threads": num_omp,
    "seeds": list(range(num_omp)),  # one seed per thread
    "environment_required": True,
    "interactions": False,
}

# configure DeNSE
ds.set_kernel_status(simu_params)

# Create and set the environment

env = ds.environment.Shape.rectangle(500*um, 500*um)

ds.set_environment(env)

Once the environment is created, we can randomly seed the neurons inside it.

soma_radius = 4.*um
pos = env.seed_neurons(num_neurons, soma_radius=soma_radius, unit="um",
                       return_quantity=False)

Warning

Setting the soma radius correctly is critical, otherwise the soma might “protrude” out of the environment, which will lead to issues for neurite creation.
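The reason is geometric: a soma of radius r centred at (x, y) fits inside the culture only if its centre stays at least r away from every wall. The sketch below samples positions uniformly with that margin in a rectangle. Several details are assumptions for illustration: the rectangle is centred on the origin, the helper names `seed_in_rectangle` and `soma_inside` are hypothetical, and the sampling strategy is not how `seed_neurons()` is implemented.

```python
import random


def seed_in_rectangle(n, width, height, soma_radius, rng=random):
    """Hypothetical: n soma centres whose whole disk lies in the rectangle.

    The rectangle is assumed centred on (0, 0); all lengths share one unit (um).
    """
    half_w = width / 2 - soma_radius
    half_h = height / 2 - soma_radius
    if half_w < 0 or half_h < 0:
        raise ValueError("soma does not fit in the rectangle")
    return [(rng.uniform(-half_w, half_w), rng.uniform(-half_h, half_h))
            for _ in range(n)]


def soma_inside(pos, width, height, soma_radius):
    """True if the disk of radius soma_radius at pos is inside the rectangle."""
    x, y = pos
    return (abs(x) + soma_radius <= width / 2
            and abs(y) + soma_radius <= height / 2)


positions = seed_in_rectangle(50, 500., 500., 4., rng=random.Random(0))
print(all(soma_inside(p, 500., 500., 4.) for p in positions))  # True
```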

We can then create the neurons:

neuron_params = {
    "growth_cone_model": "simple-random-walk",
    "position": [tuple(p) for p in pos]*um,
    "persistence_length": 200.*um,
    "speed_growth_cone": 0.03*um/minute,
    "soma_radius": soma_radius,
    "use_uniform_branching": True,
    "uniform_branching_rate": 0.009*cph,
}

axon_params = {
    "initial_diameter": 4.*um,
    "max_arbor_length": 1.*cm,
    "taper_rate": 1./400.,
}

dend_params = {
    "initial_diameter": 3.*um,
    "max_arbor_length": 500.*um,
    "taper_rate": 1./200.,
}

# "dendrites" applies the parameters to all dendrites, as in the previous example
neurite_params = {"axon": axon_params, "dendrites": dend_params}

# create neurons
n = ds.create_neurons(n=num_neurons, params=neuron_params,
                      neurite_params=neurite_params,
                      num_neurites=2)

And simulate:

1ds.simulate(4*day)

2ds.plot.plot_neurons(scale_text=False)

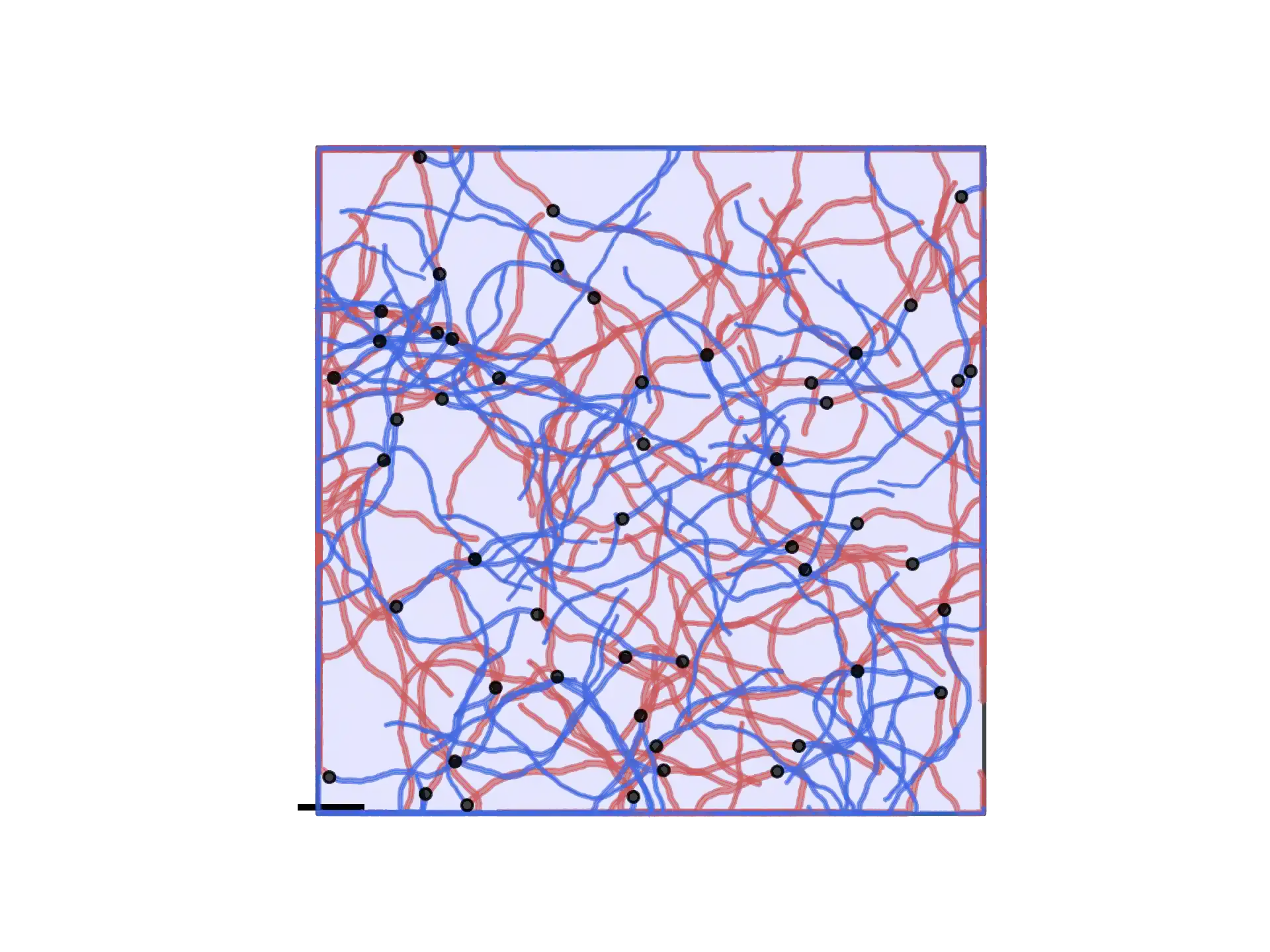

Which leads to the following structure:

Complex neurons and parameters (Acimovic2011)

If you want to build a full neuronal network, you will probably want to specify neuronal shapes that vary from one neuron to another. In this tutorial file, we show how to reproduce a study [Acimovic2011] using DeNSE.

It focuses on reproducing the shape of pyramidal neurons, with their specific neurite orientations and a varying number of neurites.

To do so, we start by defining the orientations of all possible neurites as well as their names. Each neuron has between 4 and 6 neurites, always including at least an axon, an apical dendrite, and 2 basal dendrites.

# Neuronal parameters

num_neurons = 100
num_pyr = 80
num_nonpyr = 20

density = 100 / (1e6*um**2)  # 100 neurons per mm^2 (1 mm^2 = 1e6 um^2)
radius = 564.2*um            # disk of about 1 mm^2

soma_diam = 10.*um

neuron_params = {
    "growth_cone_model": "simple-random-walk",
    "soma_radius": 0.5*soma_diam,
    "use_van_pelt": True,
    "persistence_length": 100.*um,
    "random_rotation_angles": True,
}

# pyramidal parameters

neurite_angles = {
    "axon": 0.*deg,
    "apical": 180.*deg,
    "basal_1": 75.*deg,
    "basal_2": -75.*deg,
    "basal_4": 45.*deg,
    "basal_5": -45.*deg
}

names = list(neurite_angles)  # insertion order: axon, apical, basal_1, ...
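The culture size follows from the neuron count. A disk of radius 564.2 µm has an area of about 1 mm², so placing 100 neurons in it corresponds to a density of about 100 neurons per mm² (10⁻⁴ per µm²). A quick check in plain Python:

```python
import math

radius_um = 564.2
num_neurons = 100

area_um2 = math.pi * radius_um ** 2   # area of the culture disk
area_mm2 = area_um2 / 1e6             # 1 mm^2 = 10^6 um^2
print(round(area_mm2, 3))                  # about 1.0 mm^2
print(round(num_neurons / area_mm2, 1))    # about 100 neurons per mm^2
```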

The combination of "random_rotation_angles": True and the specific angles means that all neurites will be randomly rotated "as a block" for each neuron.
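Rotating "as a block" means that a single random angle is added to every neurite angle of a given neuron. The angles between neurites are preserved while the overall orientation varies. The sketch below works in degrees. It assumes a uniform random offset over a full turn (the distribution DeNSE actually uses is not specified here) and uses the hypothetical helper `rotate_block`.

```python
import random

neurite_angles = {
    "axon": 0., "apical": 180., "basal_1": 75., "basal_2": -75.,
    "basal_4": 45., "basal_5": -45.,
}


def rotate_block(angles, rng=random):
    """Hypothetical: add one random offset (degrees) to all neurite angles."""
    offset = rng.uniform(0., 360.)
    return {name: (a + offset) % 360. for name, a in angles.items()}


rotated = rotate_block(neurite_angles, rng=random.Random(42))
# the apical dendrite still points opposite to the axon
gap = (rotated["apical"] - rotated["axon"]) % 360.
print(round(gap, 6))  # 180.0
```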

Then we generate a random number of neurites for each neuron and generate the associated names:

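import numpy as np

rng = np.random.default_rng()

# 4 to 6 neurites per pyramidal neuron (endpoint=True includes 6)
pyr_num_neur = rng.integers(4, 6, num_pyr, endpoint=True)

neurite_names = [names[:n] for n in pyr_num_neur]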
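`names` lists the axon, the apical dendrite and the first two basal dendrites first. Slicing it with a count between 4 and 6 therefore always keeps those four neurites and only varies the number of extra basal dendrites. The sketch below reproduces this with the standard library's `random.randint`, which includes both bounds just like `rng.integers(..., endpoint=True)`.

```python
import random

names = ["axon", "apical", "basal_1", "basal_2", "basal_4", "basal_5"]
rng = random.Random(0)

num_pyr = 80
pyr_num_neur = [rng.randint(4, 6) for _ in range(num_pyr)]  # 4, 5 or 6
neurite_names = [names[:k] for k in pyr_num_neur]

# every neuron keeps the axon, the apical and two basal dendrites
print(all(nn[:4] == names[:4] for nn in neurite_names))  # True
print(sorted(set(pyr_num_neur)))
```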

Because simulating many neurons can take time, in this example we set the kernel parameters so that neuron-neuron interactions are ignored and the progress of the simulation is printed:

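# `resolution` (the time step) and `num_omp` (the number of threads)
# are assumed to be defined earlier in the script
kernel_params = {
    "resolution": resolution,
    "num_local_threads": num_omp,
    "interactions": False,
    "print_progress": True
}

ds.set_kernel_status(kernel_params)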

Finally, we set the parameters and create the neurons:

pyr_params = neuron_params.copy()
pyr_params["neurite_angles"] = neurite_angles

pyr = ds.create_neurons(num_pyr, pyr_params, num_neurites=pyr_num_neur,
                        neurite_names=neurite_names)

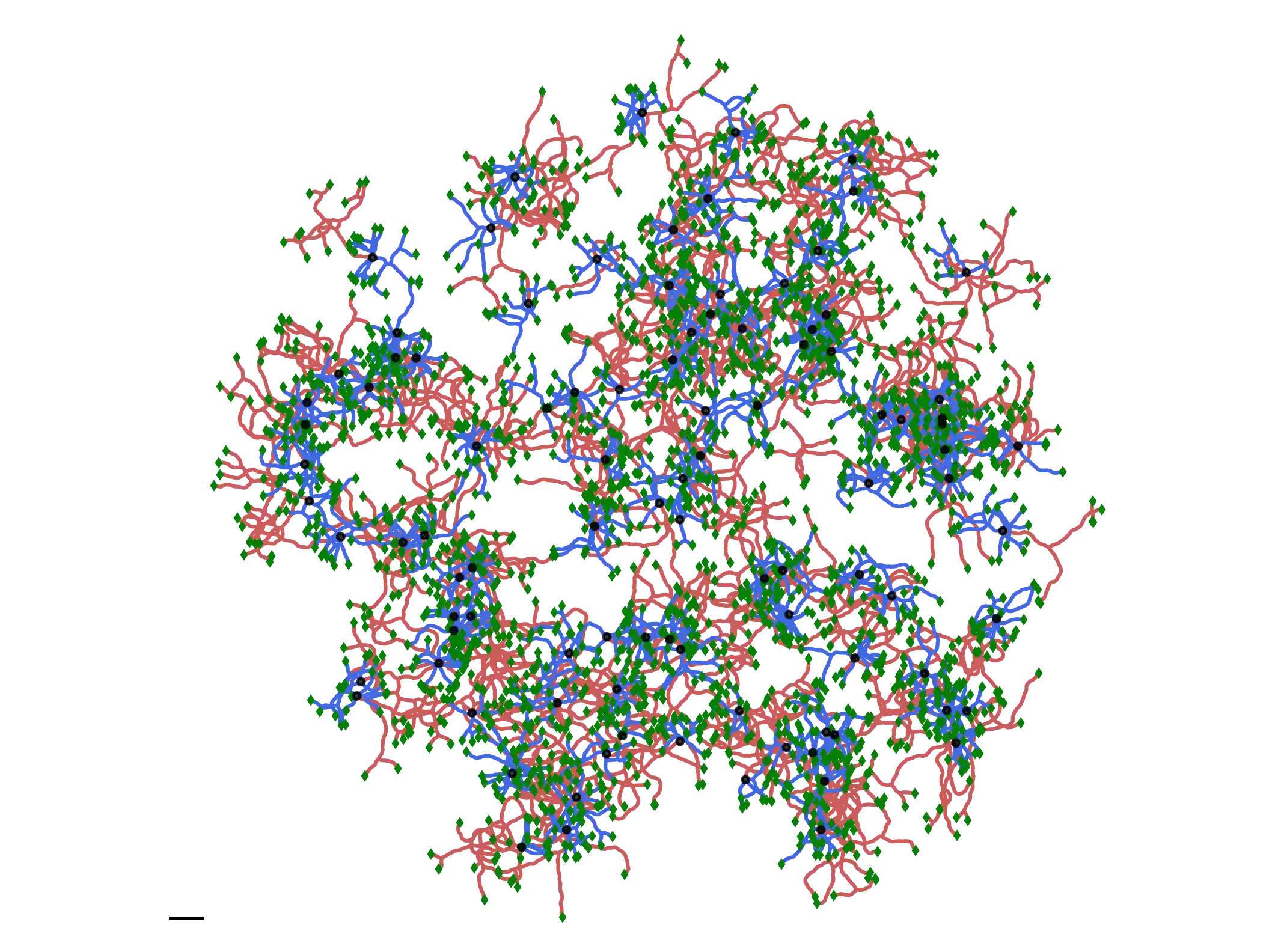

If you run the whole file, you will get the following structure (scale bar is 50 \(\mu m\)):

Other Examples

The examples/ folder contains a collection of application cases illustrating DeNSE functions.

Models

examples/models/ contains examples concerning growth models.

examples/models/neurons/ contains a collection of DeNSE models built to generate various neuronal shapes: pyramidal cells, Purkinje cells, multipolar cells, chandelier cells, starburst amacrine cells, etc. The models were hand-tuned for a qualitative resemblance with the corresponding cell types.

examples/models/neurons/ contains example code for importing and displaying a morphology file in NeuroM format.

examples/models/competition/ illustrates resource-based elongation models.

examples/models/branching/ contains code to explore the branching models.

examples/models/random_walks/ contains code that successively runs simulations with different persistence lengths for a random-walk-based growth cone model.

Space Embedding

examples/space_embedding/ contains examples of neurons growing in spatially bounded regions:

- polygons/ shows multiple neurons growing in a space-limited region containing obstacles; the connectivity network is generated at the end of the simulation.
- patterns/ illustrates the growth of neurons in a complex geometry.
- ordered_neurons/ shows code to generate neurons placed at precise locations and with a controlled initial orientation of the dendrites.
- droplets/ shows code to generate a neuronal network in a complex geometry.

examples/multi_chambers/ is a special case of space embedding, where the culture is made of different chambers that communicate through channels of different shapes.

Growth Studies

examples/growth_studies/ contains different applications showing how to study network growth:

- turning_angles/, where neurons arrive at an obstacle with different inclinations;
- turning_walls_exp/, where neurons arrive in a chamber with different opening-funnel angles;
- recorders/, which shows the usage of recorders.

References

- [Acimovic2011] J. Aćimović, T. Mäki-Marttunen, R. Havela, H. Teppola, and M.-L. Linne (2011). Modeling of Neuronal Growth In Vitro: Comparison of Simulation Tools NETMORPH and CX3D. EURASIP Journal on Bioinformatics & Systems Biology, 616382.